Why SVG is Crucially Important in the LLM Era

We're living through a remarkable moment in technology history. Large language models (LLMs) have transformed how we write code, answer questions, and create content. But a quieter revolution is happening at the intersection of AI and graphics, and SVG stands at its center. SVG is powerful, but sometimes you still need a raster format; that is where our Free SVG to Image Converter comes in handy.

While most discussions about AI-generated images focus on photorealistic outputs from models like DALL-E and Midjourney, a more fundamental shift is occurring. SVG, a format that's been around since 1999, is emerging as the perfect bridge between human intent and machine-generated visuals. This isn't a coincidence — it's a consequence of SVG's unique architecture that makes it fundamentally compatible with how language models think and generate.

> The Perfect Storm: When Text Meets Graphics

Why LLMs and SVG Speak the Same Language

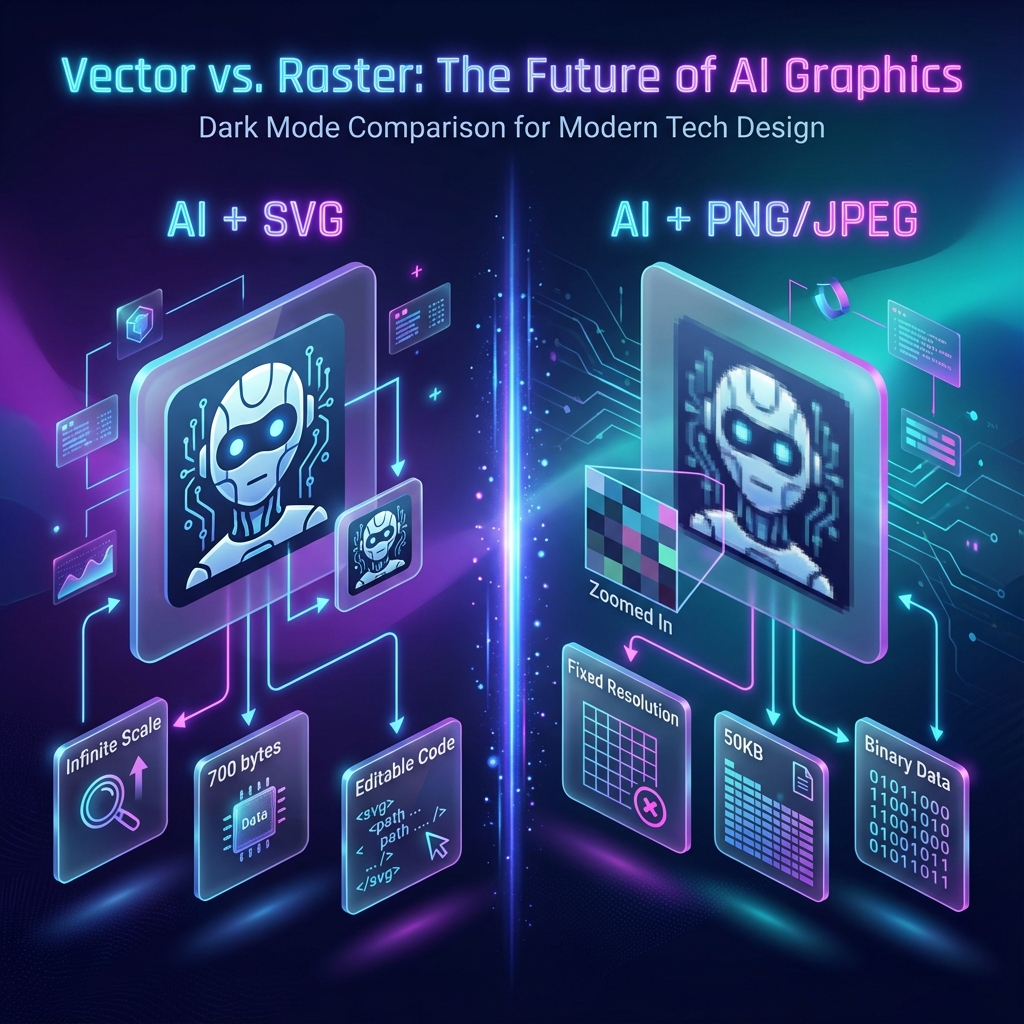

Here's a simple truth that changes everything: SVG is text. Not binary data, not compressed pixels, but human-readable XML that describes shapes, colors, and positions through words and numbers.

Consider this SVG code that an LLM can both read and write:

```xml
<svg width="200" height="200" xmlns="http://www.w3.org/2000/svg">
  <circle cx="100" cy="100" r="80" fill="#4F46E5" />
  <text x="100" y="105" text-anchor="middle" fill="white" font-size="16">
    AI Generated
  </text>
</svg>
```

When you ask ChatGPT or Claude to "create a purple circle with centered text," you typically get back markup very much like this. There is no image processing pipeline, no pixel manipulation, and no external rendering service: just text that any browser renders as a crisp vector graphic.

This text-based nature creates a powerful feedback loop. You can prompt an AI to generate SVG, see the result, then refine your request in natural language: "Make the circle smaller and change the text to bold." The AI understands both parts of that instruction because it's all happening in its native domain — language.

The Mathematics of Infinity

The second pillar of SVG's importance lies in its vector architecture. When a diffusion model such as DALL-E or Midjourney generates an image, it creates a fixed grid of pixels, typically 1024×1024 or similar. That image exists at one resolution, and scaling it up means either accepting blur or running it through another AI upscaler.

SVG operates differently. The code `<circle cx="100" cy="100" r="50"/>` doesn't describe some 7,854 pixels (π × 50²) filled in a circular pattern. It describes the mathematical concept of a circle: a center point and a radius. That description renders crisply whether it's displayed on a smartwatch or a billboard.

For AI applications, this distinction matters enormously. A single AI-generated SVG icon can serve every resolution your application needs. You need no image variants, no responsive image sets, and no extra storage, just one piece of text that adapts to any context. When a raster format is required, a high-quality SVG to Image Converter lets you render that description at exactly the resolution you need, rather than upscaling a fixed bitmap.
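Here is a minimal Python sketch that makes the resolution point concrete. It "rasterizes" the same circle description at several scales by counting the pixels whose centers fall inside it. The `covered_pixels` helper is purely illustrative: it is an unantialiased sampling rasterizer, not how browsers actually draw. The point is that the pixel grid is derived from the description at render time, so scaling up never loses detail.

```python
import math


def covered_pixels(cx: float, cy: float, r: float, scale: float = 1.0) -> int:
    """Count pixels whose centers fall inside the circle after scaling the
    drawing by `scale` (a crude, unantialiased rasterizer for illustration)."""
    cx, cy, r = cx * scale, cy * scale, r * scale
    count = 0
    # Only scan the bounding box of the scaled circle.
    for py in range(math.floor(cy - r), math.ceil(cy + r) + 1):
        for px in range(math.floor(cx - r), math.ceil(cx + r) + 1):
            # Sample each pixel at its center (px + 0.5, py + 0.5).
            if (px + 0.5 - cx) ** 2 + (py + 0.5 - cy) ** 2 <= r * r:
                count += 1
    return count


# The same <circle cx="100" cy="100" r="50"/> rendered at three scales.
for scale in (0.5, 1, 4):
    n = covered_pixels(100, 100, 50, scale)
    print(f"scale {scale}: {n} pixels (ideal area {math.pi * (50 * scale) ** 2:.0f})")
```

At scale 1 the count lands close to π × 50² ≈ 7,854. At scale 4 it is roughly 16 times that, because area grows with the square of the scale.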

> The Raster Trap: Why Current AI Image Generation Has Limits

The Hidden Costs of Pixels

Today's most celebrated AI image generators — DALL-E 3, Midjourney v6, Stable Diffusion XL — share a common limitation: they output raster images. This creates several downstream challenges that often go unexamined.

Consider file size. A typical AI-generated PNG might weigh 500KB to 2MB. For graphics that are naturally vector, such as icons, charts, and flat illustrations, the equivalent SVG often measures 5-20KB, a reduction of 95% or more. For web applications serving millions of requests, that difference translates directly into bandwidth costs, load times, and carbon footprint.
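The percentages above are simple arithmetic. This Python sketch checks both ends of the quoted ranges and measures the circle-and-text SVG shown earlier. It treats 1 KB as 1,000 bytes, which is an assumption, and `reduction_percent` is a hypothetical helper name.

```python
def reduction_percent(raster_bytes: int, svg_bytes: int) -> float:
    """Percentage of bytes saved by shipping the SVG instead of the raster file."""
    return (1 - svg_bytes / raster_bytes) * 100


# Least favourable pairing (500 KB PNG vs 20 KB SVG), then most favourable
# (2 MB PNG vs 5 KB SVG), with 1 KB taken as 1,000 bytes.
print(f"{reduction_percent(500_000, 20_000):.2f}%")   # 96.00%
print(f"{reduction_percent(2_000_000, 5_000):.2f}%")  # 99.75%

# Byte size of the circle-and-text SVG from earlier in this article.
sample_svg = """<svg width="200" height="200" xmlns="http://www.w3.org/2000/svg">
  <circle cx="100" cy="100" r="80" fill="#4F46E5" />
  <text x="100" y="105" text-anchor="middle" fill="white" font-size="16">AI Generated</text>
</svg>"""
print(len(sample_svg.encode("utf-8")), "bytes")
```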

Editability presents another challenge. Want to change the color of an element in a Midjourney output? You'll need Photoshop and careful selection work, or another generation entirely. In SVG, it's a single attribute change: fill="#FF0000" becomes fill="#00FF00". An LLM can make this modification instantly based on natural language instruction.
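Because the color lives in a plain attribute, the edit can also be scripted deterministically. Below is a minimal sketch using Python's standard `xml.etree.ElementTree`. `recolor` is an illustrative helper. It only handles the `fill` attribute, not colors set through `style` attributes or CSS.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
ET.register_namespace("", SVG_NS)  # serialize as plain <svg>, not <ns0:svg>


def recolor(svg_text: str, old: str, new: str) -> str:
    """Swap every fill="old" attribute for fill="new" (case-insensitive match)."""
    root = ET.fromstring(svg_text)
    for el in root.iter():
        if el.get("fill", "").lower() == old.lower():
            el.set("fill", new)
    return ET.tostring(root, encoding="unicode")


svg = (
    f'<svg xmlns="{SVG_NS}" width="40" height="20">'
    '<rect width="20" height="20" fill="#FF0000"/>'
    '<rect x="20" width="20" height="20" fill="#0000FF"/></svg>'
)
print(recolor(svg, "#FF0000", "#00FF00"))
```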

Then there's the resolution problem. Generate an image at 1024×1024 and later discover you need it for a 4K display? You're either regenerating, upscaling with quality loss, or accepting pixelation. SVG simply doesn't have this problem — it renders crisply at any scale because it stores mathematical descriptions, not fixed samples.

Where Text-to-Image Models Struggle

Current diffusion models excel at photorealism but struggle with precision. Ask for "a bar chart showing sales of 100, 150, 200 for Q1, Q2, Q3" and you will often get something that looks like a chart but has imprecise values, inconsistent spacing, and garbled or illegible text.

LLMs generating SVG handle this differently. Because they're producing structured code, they can express exact values:

```xml
<rect x="50" y="200" width="40" height="100" fill="#3B82F6"/> <!-- Q1: 100 -->
<rect x="110" y="150" width="40" height="150" fill="#3B82F6"/> <!-- Q2: 150 -->
<rect x="170" y="100" width="40" height="200" fill="#3B82F6"/> <!-- Q3: 200 -->
```

The mathematical precision of SVG aligns perfectly with the text-generation capabilities of LLMs, creating outputs that are both accurate and infinitely scalable.
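The coordinates in that snippet follow a simple rule. Each bar's top sits `value` units above a baseline at y = 300, bars are 40 units wide, and they are spaced 60 apart. The Python sketch below encodes that rule. The parameter names and defaults are assumptions inferred from the example, not part of any library. A deterministic generator like this is also a handy reference for checking the coordinates an LLM produces.

```python
def bar_rects(values, baseline=300, x0=50, step=60, width=40, fill="#3B82F6"):
    """Map each value to a <rect> whose top sits `value` units above the
    baseline (one SVG unit per sales unit, as in the chart above)."""
    rects = []
    for i, value in enumerate(values):
        x = x0 + i * step
        y = baseline - value  # SVG's y axis grows downward, so subtract
        rects.append(
            f'<rect x="{x}" y="{y}" width="{width}" height="{value}" fill="{fill}"/>'
        )
    return rects


sales = {"Q1": 100, "Q2": 150, "Q3": 200}
for (label, value), rect in zip(sales.items(), bar_rects(list(sales.values()))):
    print(rect, f"<!-- {label}: {value} -->")
```

Run as-is, it prints the same three `<rect>` lines as the hand-written example.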

> Real Applications Emerging Today

Dynamic UI Generation

The most immediate application of LLM-generated SVG is in user interface creation. Imagine describing a component in natural language and receiving production-ready code:

```javascript
// From prompt: "Create a circular progress indicator at 75% with a gradient"
// `ai.generateSVG` is illustrative: it stands in for whatever generation
// client you use and is not a real library API.
const progressRing = await ai.generateSVG({
  prompt: "Circular progress ring showing 75% completion",
  style: "modern, gradient fill from blue to purple",
  size: { width: 120, height: 120 },
});
// Should return valid SVG with correct stroke-dasharray calculations
```
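The comment promises "proper stroke-dasharray calculations", and the arithmetic behind that technique is short. With ring radius r, the circumference C = 2πr serves as both the dash length and the gap (`stroke-dasharray`). Setting `stroke-dashoffset` to C × (1 - p) then hides the unfinished fraction. Below is a hand-written Python sketch of the SVG such a request should produce. It is not the output of any model or of the hypothetical `ai.generateSVG`, and the colors, stroke width, and gradient id are assumptions.

```python
import math


def progress_ring(percent: float, size: int = 120, stroke: int = 10) -> str:
    """Build a circular progress ring using the stroke-dasharray technique."""
    r = (size - stroke) / 2           # keep the whole stroke inside the viewBox
    c = 2 * math.pi * r               # circumference = length of one full dash
    offset = c * (1 - percent / 100)  # shift the dash to hide the unfinished part
    mid = size / 2
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}" '
        f'viewBox="0 0 {size} {size}" role="img" aria-label="{percent:g}% complete">'
        # Fixed gradient id "g": use unique ids if several rings share a page.
        '<defs><linearGradient id="g" x1="0" y1="0" x2="1" y2="1">'
        '<stop offset="0" stop-color="#3B82F6"/><stop offset="1" stop-color="#8B5CF6"/>'
        '</linearGradient></defs>'
        # Grey track behind the progress arc.
        f'<circle cx="{mid}" cy="{mid}" r="{r}" fill="none" stroke="#E5E7EB" stroke-width="{stroke}"/>'
        # Progress arc, rotated so it starts at 12 o'clock.
        f'<circle cx="{mid}" cy="{mid}" r="{r}" fill="none" stroke="url(#g)" stroke-width="{stroke}" '
        f'stroke-dasharray="{c:.2f}" stroke-dashoffset="{offset:.2f}" '
        f'transform="rotate(-90 {mid} {mid})"/>'
        '</svg>'
    )


print(progress_ring(75))
```

For `progress_ring(75)` with the defaults, r = 55 and C ≈ 345.58, so the offset is about 86.39. Exactly a quarter of the ring stays hidden.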

Companies are already building design systems where non-designers can request interface elements through conversation. The SVG output drops into vanilla HTML as-is. It goes into React or Vue with little or no conversion; inline JSX does need hyphenated attributes such as stroke-width renamed to camelCase (strokeWidth).

Instant Data Visualization

Business intelligence is being transformed by the SVG-LLM combination. Instead of learning complex charting libraries, analysts can describe what they want to see:

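```python
from typing import Protocol


class TextModel(Protocol):
    """Any LLM client exposing generate(prompt) -> str (an assumed interface)."""

    def generate(self, prompt: str) -> str: ...


def visualize_quarterly_data(llm: TextModel, data: dict, request: str) -> str:
    """
    Generate SVG visualization from natural language request.

    Example: "Show me a comparison of this quarter vs last,
    highlight the growth areas in green"
    """
    prompt = f"""
Create an SVG chart for this data: {data}
Visualization request: {request}
Output clean, accessible SVG with proper ARIA labels.
"""
    return llm.generate(prompt)
```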

When the model follows those instructions, the resulting visualizations aren't just images. They're accessible and searchable, and they can be refined further through additional prompts.

Generative Brand Assets

Marketing teams are discovering they can maintain brand consistency while rapidly producing variations. Need a social media graphic for a flash sale? Describe it once, generate SVG, then easily modify colors, text, and dimensions for different platforms — all through conversation with an AI that understands both your brand guidelines and the SVG format.
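Resizing for different platforms is the most mechanical of those edits. Here is a minimal Python sketch. It assumes the artwork has a viewBox, or unitless width and height that can become one. It keeps the viewBox, changes only width and height, and lets `preserveAspectRatio` fit the design into the new frame. The platform names and pixel sizes are placeholders. A genuinely different layout, such as a wide banner, would still call for another prompt.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
ET.register_namespace("", SVG_NS)  # serialize without an "ns0:" prefix

# Hypothetical target sizes; substitute your own platform specs.
PLATFORM_SIZES = {"square": (1080, 1080), "banner": (1500, 500)}


def resize_variant(svg_text: str, width: int, height: int) -> str:
    """Return a copy displayed at width x height, reusing the original viewBox."""
    root = ET.fromstring(svg_text)
    w, h = root.get("width"), root.get("height")
    if root.get("viewBox") is None and w and h:
        # Assumes unitless width/height; they become the coordinate system.
        root.set("viewBox", f"0 0 {w} {h}")
    root.set("width", str(width))
    root.set("height", str(height))
    root.set("preserveAspectRatio", "xMidYMid meet")  # scale to fit, centered
    return ET.tostring(root, encoding="unicode")


base = (
    f'<svg xmlns="{SVG_NS}" width="200" height="200">'
    '<circle cx="100" cy="100" r="80" fill="#4F46E5"/></svg>'
)
variants = {name: resize_variant(base, w, h) for name, (w, h) in PLATFORM_SIZES.items()}
print(variants["banner"])
```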

> The Technical Synergy: Why This Works So Well

Structured Data Meets Structured Output

LLMs excel at generating structured text — JSON, XML, code. SVG is XML. This isn't a hack or a workaround; it's a natural alignment of capabilities.

When an LLM generates SVG, it applies the same pattern-matching and completion mechanisms it uses for any other code. Training data drawn from the open web almost certainly includes vast numbers of SVG files, which teach the model the vocabulary of circles, rectangles, paths, and gradients.

Semantic Elements, Semantic Understanding

SVG's element names carry meaning that LLMs can leverage. `<circle>` means circle. `<rect>` means rectangle. `<text>` contains readable content. This semantic clarity helps LLMs generate appropriate structures for described visuals.

Compare this to generating images through latent diffusion, where the model must learn abstract representations of visual concepts. With SVG, the concepts are explicit in the syntax itself.

The Edit-Refine Loop

Perhaps SVG's greatest advantage for AI applications is the refinement workflow it enables. Generated output not quite right? You can:

- Ask the AI to modify specific attributes

- Manually tweak values in the SVG code

- Extract components and regenerate only what needs changing

- Combine outputs from multiple generation attempts

This iterative improvement process mirrors how humans naturally work with creative outputs — rough draft, feedback, revision. Raster images don't support this workflow nearly as well.
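One way to extract components and regenerate only what needs changing is to ask the model to give each component a stable id. You can then splice a regenerated piece into the accepted draft. The `splice` helper below is a hypothetical sketch using Python's standard ElementTree, and it assumes both documents use matching ids.

```python
import copy
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
ET.register_namespace("", SVG_NS)  # serialize without an "ns0:" prefix


def splice(base_svg: str, donor_svg: str, element_id: str) -> str:
    """Replace the element with id=element_id in base_svg by the element with
    the same id from donor_svg, leaving the rest of base_svg untouched."""
    base, donor = ET.fromstring(base_svg), ET.fromstring(donor_svg)
    replacement = donor.find(f".//*[@id='{element_id}']")
    if replacement is None:
        raise KeyError(f"donor has no element with id {element_id!r}")
    # ElementTree has no parent pointers, so locate the parent by scanning.
    for parent in base.iter():
        for index, child in enumerate(parent):
            if child.get("id") == element_id:
                parent[index] = copy.deepcopy(replacement)
                return ET.tostring(base, encoding="unicode")
    raise KeyError(f"base has no element with id {element_id!r}")


draft = (f'<svg xmlns="{SVG_NS}"><circle id="logo" r="10" fill="red"/>'
         '<text id="headline">Flash sale</text></svg>')
retry = (f'<svg xmlns="{SVG_NS}"><circle id="logo" r="12" fill="blue"/>'
         '<text id="headline">Other</text></svg>')
print(splice(draft, retry, "logo"))  # new logo, original headline
```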

> Looking Forward: The Trajectory of AI Graphics

Near-Term: Component Libraries (2024-2025)

We're already seeing the emergence of AI-powered SVG component libraries. These systems understand design tokens, accessibility requirements, and responsive behavior. Feed them a design system specification and they generate consistent, on-brand SVG components on demand.

Medium-Term: Full Design Systems (2025-2026)

The next evolution connects individual components into coherent systems. LLMs will generate not just icons or charts, but complete visual languages — color relationships, spacing scales, animation patterns — all expressed as SVG with accompanying CSS and JavaScript.

Longer-Term: Autonomous Design Evolution (2027+)

Eventually, AI systems may monitor how users interact with generated designs and autonomously propose improvements. A/B testing becomes conversational: "Users seem to click this button more when it's larger and has a subtle shadow effect."

> Practical Guidance for Developers

Writing Effective Prompts

The quality of AI-generated SVG depends heavily on prompt construction. Effective prompts include:

Specific dimensions and constraints: "Create an icon that works at 24x24 and 48x48 pixels" produces better results than "make a small icon."

Style references: "Minimal line icon style, 2px stroke, rounded corners" gives the model clear aesthetic direction.

Accessibility requirements: "Include appropriate ARIA labels and title elements" ensures usable output.

Technical constraints: "Use only path elements for compatibility" or "Avoid filters for better mobile performance" shapes the generation appropriately.
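These guidelines are easy to encode so that every request carries them. Below is a hypothetical Python prompt builder, roughly the role played by the `buildPrompt` helper that the integration pattern later in this guide calls but does not define. The `IconRequest` fields and the exact wording are assumptions, not a tested prompt.

```python
from dataclasses import dataclass, field


@dataclass
class IconRequest:
    """Hypothetical request shape; the field names are illustrative."""
    description: str
    sizes: tuple[int, ...] = (24, 48)
    style: str = "minimal line icon style, 2px stroke, rounded corners"
    title: str = ""
    constraints: list[str] = field(default_factory=lambda: ["Use only path elements"])


def build_prompt(req: IconRequest) -> str:
    """Turn each prompt guideline into an explicit line of the prompt."""
    sizes = " and ".join(f"{s}x{s}" for s in req.sizes)
    lines = [
        f"Create an SVG icon: {req.description}.",
        f"It must remain legible at {sizes} pixels; use a viewBox so it scales.",
        f"Style: {req.style}.",
        f'Include a <title> element ("{req.title or req.description}") and role="img".',
        *(f"Constraint: {c}." for c in req.constraints),
        "Return only the SVG markup, with no surrounding explanation.",
    ]
    return "\n".join(lines)


print(build_prompt(IconRequest(description="a shopping cart")))
```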

Validation and Optimization

AI-generated SVG should be treated like any generated code — trust but verify. Useful practices include:

- Running SVGO or a similar optimizer to reduce file size and strip unnecessary attributes, since AI outputs tend to be verbose.

- Validating against the SVG specification to catch structural issues before they cause rendering problems.

- Testing across browsers, since some SVG features have inconsistent support.

- Checking accessibility with screen readers and automated tools.
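Full validation against the SVG specification needs a schema-aware validator, but a lightweight lint catches some common problems in generated output. This Python sketch checks only a few things: that the markup is well-formed XML, that the root is an `<svg>` element in the SVG namespace, and that it has a `viewBox` and a `<title>`. `lint_svg` is a hypothetical name. It complements browser and screen-reader testing rather than replacing it.

```python
import xml.etree.ElementTree as ET

SVG_NS = "{http://www.w3.org/2000/svg}"  # Clark notation used by ElementTree


def lint_svg(svg_text: str) -> list[str]:
    """Return the problems found; an empty list means these checks passed.
    A lightweight structural lint, not full validation against the SVG spec."""
    try:
        root = ET.fromstring(svg_text)
    except ET.ParseError as err:
        return [f"not well-formed XML: {err}"]
    problems = []
    if root.tag != f"{SVG_NS}svg":
        problems.append("root element is not <svg> in the SVG namespace")
    if root.get("viewBox") is None:
        problems.append("missing viewBox, so the graphic may not scale cleanly")
    if root.find(f"{SVG_NS}title") is None:
        problems.append("missing <title> child for screen readers")
    return problems


# Reports the missing viewBox and the missing <title>.
print(lint_svg('<svg xmlns="http://www.w3.org/2000/svg" width="24" height="24">'
               '<path d="M2 2h20v20H2z"/></svg>'))
```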

Integration Patterns

For production systems, consider wrapping AI generation in structured interfaces:

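```typescript
interface SVGGenerationRequest {
  description: string;
  dimensions: { width: number; height: number };
  style?: 'minimal' | 'detailed' | 'outlined';
  colorScheme?: string[];
  accessibility?: {
    title: string;
    description: string;
  };
}

// `llm`, `buildPrompt`, `optimize`, `validate`, and `ValidatedSVG` are
// application-specific pieces assumed to be defined elsewhere.
async function generateAndValidateSVG(
  request: SVGGenerationRequest
): Promise<ValidatedSVG> {
  const raw = await llm.generate(buildPrompt(request)); // ask the model for SVG markup
  const optimized = await optimize(raw);                // e.g. an SVGO pass
  const validated = await validate(optimized);          // structural and accessibility checks
  return validated;
}
```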

This pattern ensures consistent quality while leveraging AI's generative capabilities.

> Conclusion: A New Creative Partnership

The convergence of SVG and LLMs represents something genuinely new — not just in what's technically possible, but in who can participate in graphic creation. When a product manager can describe a chart and receive valid, optimized, accessible SVG, the traditional handoff to designers or developers becomes optional rather than required.

This doesn't diminish the value of skilled designers. Instead, it changes their role toward higher-level creative direction and quality assurance, while routine visual production becomes conversational. The designers who embrace this shift will find themselves amplified rather than replaced.

For developers, SVG-LLM integration offers a path to solving visual problems without leaving the text-based world where we're most comfortable. The ability to generate, modify, and reason about graphics through code and natural language together opens possibilities that neither modality offers alone.

We're early in this transformation. The tools are rough, the outputs sometimes require manual cleanup, and the integration patterns are still evolving. But the fundamental alignment between SVG's architecture and LLMs' capabilities suggests this is more than a passing trend. It's a new foundation for how we'll create visual content in the AI era.

Exploring the intersection of vector graphics and artificial intelligence — this guide was created by the SVG2IMG team to help developers and designers navigate the emerging landscape of AI-generated graphics. Have questions or insights to share? We'd love to hear from you.